SiLC: Machine Vision

January 1, 2024

(This article has been updated.)

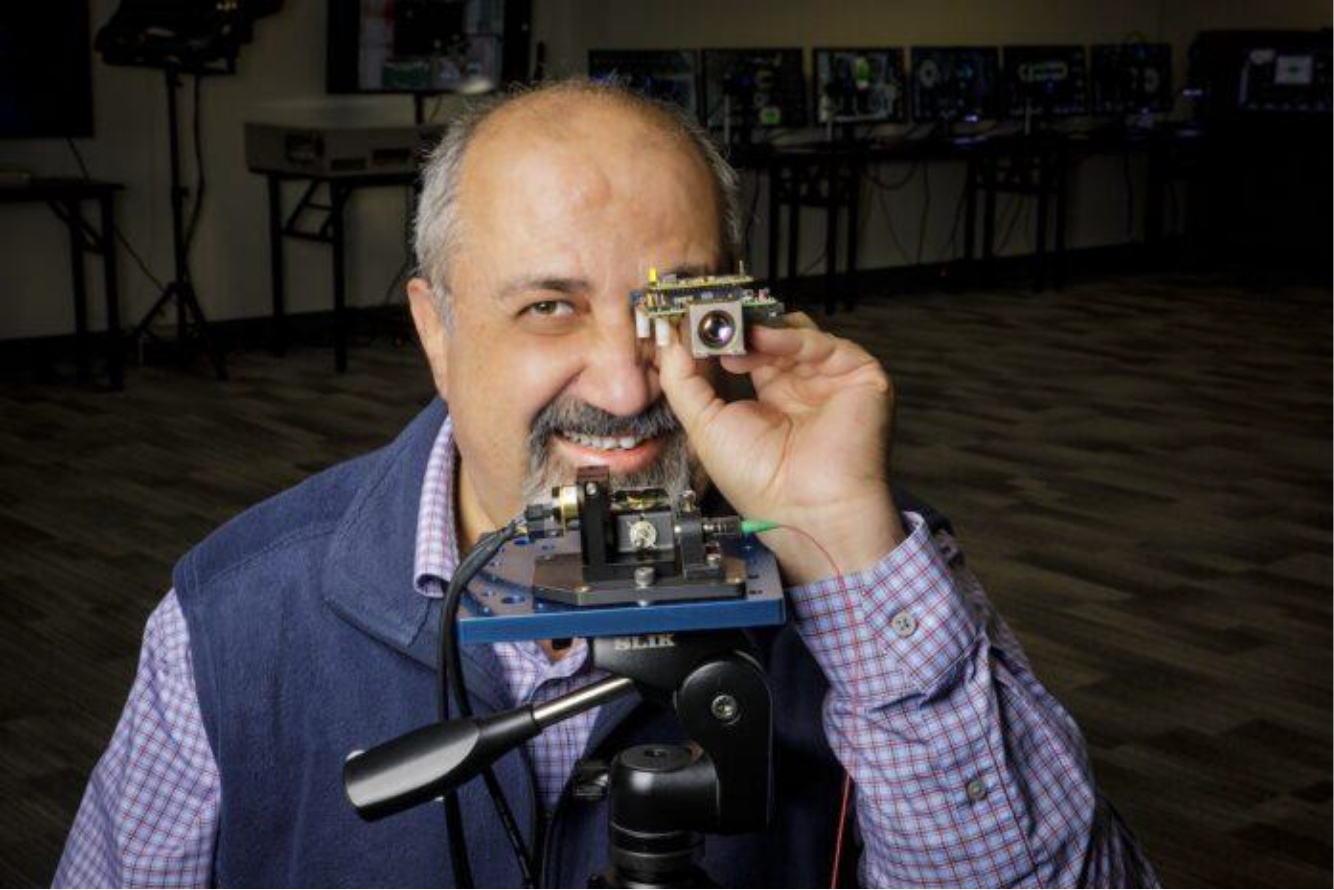

Decades ago, engineers such as Mehdi Asghari helped develop the integrated circuits that power the world today.

Built on silicon, these circuits – colloquially called microchips – have driven vast advances in electronics by packing billions of transistors onto ever smaller chips. Now, Asghari wants to do the same with photons, the particles that carry light, instead of the electrons that carry electricity.

“Thirty years ago, these things would have been the size of a football pitch (a soccer field). Now it’s in a cellphone,” said Asghari, founder and chief executive of SiLC Technologies, which is based in Monrovia. “That’s for electrons, what carries a current. What we’re trying to do is do the same thing for photons. We’re trying to achieve the same breakthrough for photons.”

He is working on so-called machine vision, which interprets images captured from light and allows machines to react to their environment – say, automated vacuums, collision sensors on vehicles and delivery robots. The company in December announced a breakthrough: its Eyeonic Vision Sensor chip detected and processed images from a distance of 2 kilometers, or about 1 1/4 miles. It did so in the wake of nearly doubling its fundraising. The company is now targeting a range of 10 kilometers.

This, Asghari explained, is in the service of advancing robotics from simple machines that perform repeated precise tasks to ones able to interpret their environment and perform independently.

“These are not things that a robot can easily do. The robot didn’t even need to see to do work at first,” he said. “We are trying to replace the nonexistent workers (due to staffing shortages), and in order to do that the robots need to see the world.”

Microchips, for vision

At SiLC, founded in 2018, Asghari has assembled a team to push machine vision to ever higher levels.

The company has 50 employees, 40 of whom have graduate-level degrees in their fields. Twenty have their doctorates, Asghari pointed out.

The quest to improve machine vision goes further than making a better camera, Asghari explained. To achieve his goal of replicating what the human eye does, SiLC needs to create a system that can interpret what it sees – that is, one that can calculate depth and velocity, the things that will help an AI-enhanced machine interact with its environment. The human eye inspires this approach because most of the information it processes is actually velocity and depth, rather than color, Asghari said.

“Motion is key to our vision, and it has been critical to our survival. If a tiger is jumping at you, you run away before you even process the image. In sports, you don’t even see the ball in tennis, but you know the motion is there,” he explained. “These motion sensors in the human eye are directly linked to your muscles, and that enables us to have very fast reactions. These are capabilities that machines don’t have.”

Part of this equation is using lidar – light detection and ranging – instead of radar, for its strength in 3D modeling. This helps a machine process range, depth and velocity.

“Making an eye for robots is quite complicated. The human eye is probably the most complex optical device in existence, so trying to mimic that is complicated,” Asghari said. “In order to make such complicated equipment in a tiny thing that can now be put in a robot or one day in your cellphone, we have to integrate these components into a silicon chip.”

Population shrinkage

In miniaturizing machine vision technology to the size of a microchip, Asghari and his company hope to solve another problem facing many societies: slowing population growth.

“One of the big problems that we have today is that we as humans are not having kids anymore. These days, the average family would dare to have two kids,” he said.

“Industries that need people to do physical work, they just can’t find workers anymore. The working-age population is (smaller), and this has been going on for years. But after Covid, it really started hitting people.”

In other words, more and more industries are going to be deploying robots, machines and devices that rely on precise vision to take on these jobs. And SiLC is designing the chips that could facilitate that.

SiLC raised $25 million in new funding in November, which, combined with its seed and Series A rounds, brings its total raised to $56 million. Sources of funding include Dell Technologies Capital, ROHM Semiconductor, Hankook USA, Yamato Transport and UMC Capital. Asghari said SiLC, with the current funding, has a runway through the end of the year.

Target markets

As SiLC develops its chips, it is also seeking a variety of commercial markets for its products. And while it is not expressly looking for a military application for such technology, Asghari acknowledged there would inevitably be dual-use products that make their way into defense contracts.

“As the capabilities of generative AI get better and more mature all the time, having additional input devices like machine vision are going to create entire new-use cases and new industries, potentially,” said Erik Krogh, chair of the business analytics program at Pepperdine Graziadio Business School. “The economic need to replace some humans with robots is pretty important.”

Asghari said SiLC has strong interest from rail and aviation clients because of the need to anticipate potential collisions. Heavy trains have long braking distances and jet-powered planes travel so fast their windows to maneuver are short – so a head start of a kilometer or so is vital information for their operators.

On a smaller scale, Asghari said he hopes to supplant current cellphone cameras, as well as other sorts of cameras. In film production, camera technology can potentially use stronger machine vision to take the place of bulky, cumbersome motion-capture equipment.

Athletic trainers, for another example, might use the mapping abilities of SiLC products to analyze and evaluate a person’s motions and velocity, to correct form or diagnose an issue.

“Because we can analyze motion and velocity, we can collect a lot of statistics that are very hard to get,” Asghari said. “We’re going to enable that to become a camera that any school coach can purchase and put on a tripod next to a game.”

Krogh of Pepperdine is researching an even more novel use of machine vision: implementing it in robots designed to interact with and anticipate the movements of people with dementia. This project relies on two different uses of machine vision, he said.

“The first one is measuring a person’s gait, and we’re pretty far along with that,” Krogh said, explaining that such a robot could prevent falls. “Using lidar and machine vision to assess what’s going on is one of the first deliverables in this project, but what we ultimately want to do is use machine vision to study faces and assess when a person is becoming agitated, because that is one of the main precursors to when a person is about to fall.”

Asghari said SiLC has multiple business verticals – including security, industrial and robotics, and smart infrastructure – each with one or two major customers. He said the company plans to seek additional customers as manufacturing capacity increases. SiLC’s current facility has limited manufacturing capacity, to the tune of hundreds of mostly prototype units; the company recently signed a lease to take over a building next door to that facility and aims to increase its manufacturing capacity to thousands of units at a time.

Asghari also said the company is considering future locations in the Bay Area and Japan for research and development or production.